The rapid adoption of Artificial Intelligence (AI) is transforming the way businesses operate: streamlining workflows, enhancing user experiences, and pushing automation to new heights. But as AI becomes more embedded in our daily processes, we must confront some critical and immediate challenges. For data professionals, the conversation is shifting from “Can we use AI?” to “Should we?” More importantly, how do we strike a balance between leveraging AI and safeguarding data privacy in this era of automation and efficiency?

AI Thrives on Data—But at What Cost?

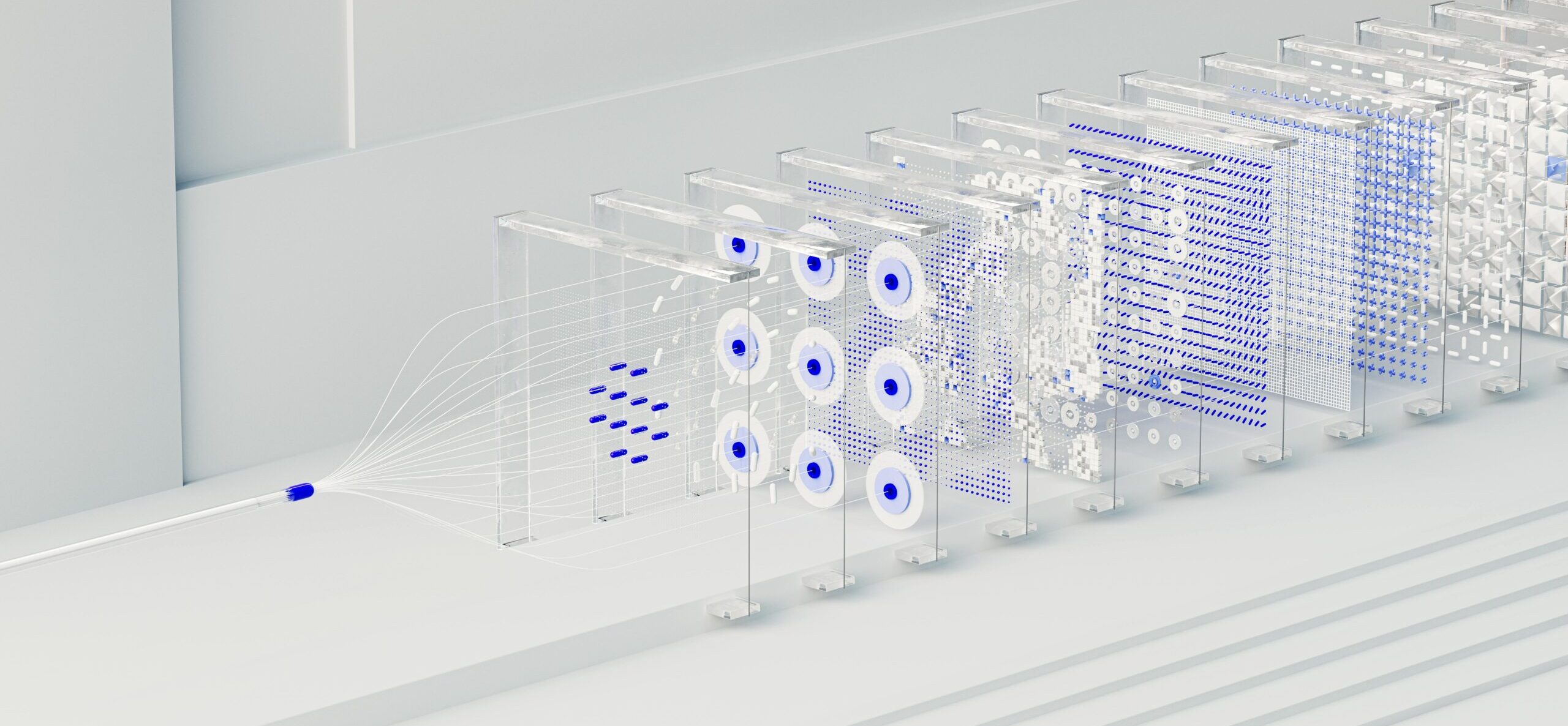

At their core, AI systems thrive on data. Whether it is user behaviour or sensitive financial and health information, more data generally makes these systems more powerful and accurate, especially in machine learning, deep learning, and big data applications.

But this data dependency comes with serious concerns. As AI’s appetite for information grows, so do the risks of data misuse, security breaches, and ethical dilemmas. Often, it’s unclear how the data is processed, who accesses it, or how long it’s retained. Even anonymized data isn’t foolproof: research shows that AI can re-identify individuals by cross-referencing datasets.
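To make the re-identification risk concrete, here is a minimal sketch of a classic linkage attack. It is a plain database-style join rather than an AI model, but it shows the cross-referencing step that makes "anonymized" data risky: a dataset with names removed still carries quasi-identifiers (ZIP code, birth date, sex), and joining them against a public dataset that includes names can single people out. All records, field names, and functions here are hypothetical and invented for illustration.

```python
from dataclasses import dataclass


# Hypothetical "anonymized" health records: names removed, quasi-identifiers kept.
@dataclass(frozen=True)
class HealthRecord:
    zip_code: str
    birth_date: str
    sex: str
    diagnosis: str


# Hypothetical public dataset (for example, a voter roll) that includes names.
@dataclass(frozen=True)
class PublicRecord:
    name: str
    zip_code: str
    birth_date: str
    sex: str


def quasi_id(record) -> tuple[str, str, str]:
    """Attributes that are harmless alone but can single out a person together."""
    return (record.zip_code, record.birth_date, record.sex)


def link_records(health, public):
    """Re-identify health records whose quasi-identifiers match exactly one public record."""
    index: dict[tuple[str, str, str], list[PublicRecord]] = {}
    for p in public:
        index.setdefault(quasi_id(p), []).append(p)
    matches = []
    for h in health:
        candidates = index.get(quasi_id(h), [])
        if len(candidates) == 1:  # a unique match exposes the "anonymous" row
            matches.append((candidates[0].name, h.diagnosis))
    return matches


health = [
    HealthRecord("02138", "1960-07-31", "F", "hypertension"),
    HealthRecord("02139", "1985-01-12", "M", "asthma"),
]
public = [
    PublicRecord("Alice Example", "02138", "1960-07-31", "F"),
    PublicRecord("Bob Example", "02139", "1985-01-12", "M"),
    PublicRecord("Carol Example", "02139", "1985-01-12", "M"),
]

print(link_records(health, public))  # [('Alice Example', 'hypertension')]
```

The second health record survives only because two public records share its quasi-identifiers. With one more attribute to cross-reference, it would likely be exposed too, which is why removing names alone is rarely enough.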

As we continue advancing in AI, it’s essential to question not just what it can do, but what it should do—particularly when it comes to safeguarding data privacy.

Why Ethics Should Be Built into AI, Not Bolted On

Historically, many organizations have treated AI ethics as an afterthought, something to address only once the algorithms are up and running. But this reactive approach is both outdated and risky in today’s data-driven world. Ethical considerations must be built into AI systems from the very beginning, not bolted on after deployment.

For professionals handling data, ethical AI requires:

- Transparency: Can we explain how a model makes its decisions?

- Accountability: Who takes responsibility when AI causes harm or bias?

- Data minimisation: Are we collecting only what’s truly necessary?

User trust, client relationships, and regulatory compliance all hinge on these questions. Without clear answers, we risk not only losing credibility but also failing to comply with evolving regulations and emerging AI-specific frameworks.
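One way to turn the data-minimisation question into something enforceable is an explicit allowlist: each declared processing purpose lists the only fields it may receive, and everything else is dropped before the data reaches a model. The sketch below assumes that design; the purpose names, field names, and the `minimise` helper are all hypothetical.

```python
# Hypothetical policy: each processing purpose maps to the only fields it needs.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "churn_model": frozenset({"account_age_days", "monthly_usage", "plan"}),
    "fraud_check": frozenset({"transaction_amount", "country", "account_age_days"}),
}


def minimise(record: dict, purpose: str) -> dict:
    """Return a copy of `record` containing only the fields allowed for `purpose`.

    Unknown purposes raise an error instead of silently passing data through.
    """
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no data-minimisation policy for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


raw = {
    "name": "Alice Example",
    "email": "alice@example.com",
    "account_age_days": 420,
    "monthly_usage": 37.5,
    "plan": "pro",
}
print(minimise(raw, "churn_model"))
# {'account_age_days': 420, 'monthly_usage': 37.5, 'plan': 'pro'}
```

Failing closed on an unknown purpose is the key design choice: a new use of the data has to be justified and added to the policy before it receives anything.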

Building Professional Trust in AI Systems

If you are part of a team that designs, deploys, or uses AI tools, keep in mind that trust is your most valuable currency. But trust isn’t just about having a secure infrastructure. It’s about creating a data culture that:

- Questions the necessity of data collection.

- Enforces robust access controls and consent mechanisms.

- Audits AI models for bias and ethical blind spots.

Professional trust in AI is not automatic; it has to be earned through action and evidence.
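As one example of the evidence a bias audit can produce, the sketch below computes per-group selection rates and the demographic parity difference (the largest gap in positive-prediction rate between groups). This is only one of many fairness metrics, and what gap counts as acceptable depends on context; the predictions and group labels are hypothetical. Toolkits such as Fairlearn provide maintained implementations of metrics like this.

```python
from collections import defaultdict


def selection_rates(predictions: list[int], groups: list[str]) -> dict[str, float]:
    """Share of positive predictions (1) within each group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    positives: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan-approval predictions for two groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(selection_rates(preds, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```

A gap like this does not prove the model is unfair on its own, but it is the kind of documented, repeatable check that turns "we audit for bias" into evidence.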

What You Can Do Today

Here are three practical ways to champion data privacy and AI ethics within your organisation:

- Educate your team on AI regulations and privacy standards.

- Conduct regular privacy impact assessments on AI projects.

- Advocate for responsible AI use in meetings and decisions, even when it’s inconvenient.

Final Thoughts

In the age of AI, professionals cannot afford to be passive about data privacy. As stewards of information and innovation, we must ensure that technology serves people, not the other way around.

Let’s build AI systems we can trust—because privacy isn’t optional.